ARCHES (Accessible Resources for Cultural Heritage EcoSystems) was an EU-funded Horizon 2020 project coordinated by VRVis.

Barrier-free access to art and museums for blind and visually impaired people through 3D technology.

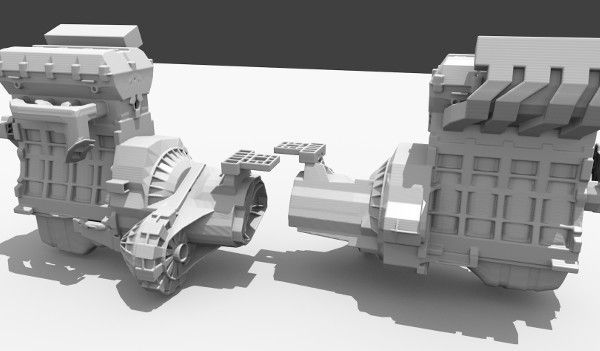

Augmented reality makes simulation results for car engine noise visible.